Architecture

Atura is an AI assistant platform that enables deep integration into client systems. Every client environment is associated with an isolated Microsoft Azure resource group, i.e. DEV, QA and PRODUCTION environments are created for each client. The following diagram gives a high-level overview of the Atura architecture.

Each AI assistant is fully customised to meet the client's needs and can be exposed over many channels - Facebook Messenger or Slack, for example - or over a JavaScript-based embedded webchat widget. At present the most commonly used channels are the webchat widget and Facebook Messenger, although interest in WhatsApp is on the rise.

Technology Stack

At Atura we have a list of standard technologies we use for each aspect of an AI assistant, but this list is not immutable. Most can be substituted, with a little effort, if required by the nature of an individual AI assistant's design. Below is a list of each core area of the AI assistant, and the default tech that is associated with each one:

Microsoft Azure Components

- App Services - hosts the bot and service desk (if required).

- Function App - reads messages from the Service Bus and sends them to the database and conversation archive.

- Key Vault - stores keys and secrets securely.

- App Insights - stores logs and is used to assist when debugging issues.

- Cosmos DB - stores metadata associated with the bot.

- Table Store - stores end-user conversations.

- Service Bus Topics - receives and propagates chat messages flowing through the system, ensuring none get lost.

- Active Directory - manages consultants (service desk users) and facilitates login.

Dashboard Front-End

- ES6+ with Webpack and Babel - to use the latest JavaScript features.

- React - to structure the service desk front-end.

- Redux - for predictable state management.

- ADAL Angular - for Azure Active Directory authentication.

Webchat Widget Front-End

- ES6+ with Webpack and Babel - to use the latest JavaScript features.

- BotFramework-DirectlineJS - to communicate with the Microsoft Bot Framework service.

Web Services

- ASP.NET MVC 5 and ASP.NET Web API 2

- C# v7.0

- .NET Framework 4.6.2.

AI assistant

- Microsoft Bot Framework v3.15 (Bot Builder)

Natural Language Processing (NLP)

- Microsoft LUIS (Language Understanding Intelligent Service)

- Microsoft QnA Maker - for question-and-answer style bot responses.

Hosting

An Azure resource group is created for each client environment i.e. one environment for QA, one environment for PRODUCTION etc. The resource group contains all the necessary Azure assets, except for an Azure Active Directory instance (of which there is only one per client) and the external cognitive services (such as Microsoft LUIS).

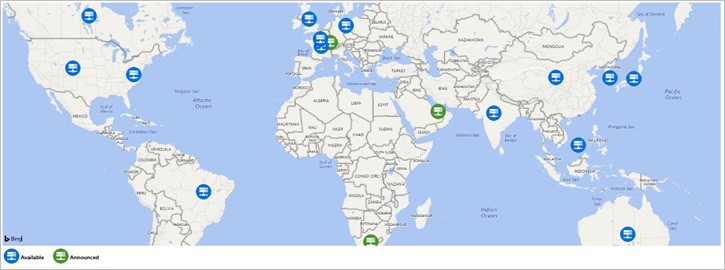

Azure resources are hosted in West Europe by default, but we can switch to host the client's AI Assistant in another region if required. All data is also stored in the same location that the AI Assistant is hosted in. South African hosting has been announced by Microsoft, but it is not yet available at the time of writing (August 2018).

The following map shows regions where bots may be hosted. Source: https://azuredatacentermap.azurewebsites.net/

Hosting On Premises

Due to the cloud-based nature of enterprise AI and NLP engines, the core of Atura must reside in the Microsoft Azure Cloud. Conversation data may however be stored on premises, if preferred.

Availability and Redundancy

An automated deployment pipeline is used to deploy to each environment. We ensure there is no downtime during deployments by using specific Azure features such as deployment slots.

The following figures are accurate at the time of writing (August 2018). The uptime SLAs are Microsoft Azure SLAs and are noted here for illustrative purposes only:

- Azure App Services - 99.95% uptime SLA.

- Azure Function App (running on App Service) - 99.95% uptime SLA.

- Azure Key Vault - Storage and failover to secondary region, 99.9% uptime SLA.

- Azure Cosmos DB - Locally Redundant Storage, 99.99% uptime SLA.

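- Azure Table Store - Locally Redundant Storage, designed for 99.999999999% (11 nines) durability of stored objects. Note that this is a durability figure rather than an uptime SLA.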

- Azure Service Bus (Topics) - 99.9% uptime SLA.

- Azure Active Directory - 99.9% uptime SLA.
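As a rough illustration of how these figures combine, the sketch below multiplies the individual uptime SLAs to estimate a composite availability. It assumes that a request depends on every listed component in series and that failures are independent; both are simplifications made for this example and not a description of Atura's actual dependency graph. Table Store is left out of the calculation.

```python
from math import prod

# Uptime SLAs copied from the list above (August 2018 figures).
UPTIME_SLA = {
    "App Services": 0.9995,
    "Function App": 0.9995,
    "Key Vault": 0.999,
    "Cosmos DB": 0.9999,
    "Service Bus": 0.999,
    "Active Directory": 0.999,
}


def composite_availability(slas):
    """Availability of a chain in which every component must be up (assumed)."""
    return prod(slas.values())


def max_downtime_hours_per_year(availability):
    """Convert an availability fraction into hours of downtime per 365-day year."""
    return (1.0 - availability) * 365 * 24


composite = composite_availability(UPTIME_SLA)
print(f"Composite availability: {composite:.4%}")
print(f"Implied downtime: {max_downtime_hours_per_year(composite):.1f} hours/year")
```

The composite figure is always lower than the weakest individual SLA, which is why the per-service numbers should be read as illustrative rather than as a guarantee for the platform as a whole.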

References

Azure SLA summary: https://azure.microsoft.com/en-us/support/legal/sla/summary/

Azure Key Vault redundancy: https://docs.microsoft.com/en-us/azure/key-vault/key-vault-disaster-recovery-guidance

Azure Service Bus SLA: https://azure.microsoft.com/en-us/support/legal/sla/service-bus/v1_0/

Cosmos DB SLA details: https://azure.microsoft.com/en-us/support/legal/sla/cosmos-db/v1_1/

LRS (Locally Redundant Storage) details: https://docs.microsoft.com/en-us/azure/storage/common/storage-redundancy-lrs

Azure deployment slots: https://docs.microsoft.com/en-us/azure/app-service/web-sites-staged-publishing

Backup

Since Microsoft Azure stores multiple copies of all data, a hardware failure should not result in data loss. We do, however, store snapshots of data in the unlikely event that data is deleted or corrupted, whether accidentally or maliciously.

- Azure Table Store - Atura creates a backup of the conversation table daily, and stores snapshots for up to 30 days in a different Azure region as an additional level of redundancy in case of disaster at the original location.

- Azure Key Vault - Atura creates a backup of the Key Vault daily, and stores snapshots for up to 30 days.

- Cosmos DB - automatically backed up by Microsoft Azure every four hours. Snapshots are kept for 30 days in case of deletion.

- App Services, Function App etc. - can be redeployed from the ground up whenever necessary, so no backup is required.
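The 30-day retention window for daily snapshots can be sketched as follows. This is a minimal illustration only: the function name and the idea of pruning by date are assumptions made for the example, and the source does not describe how Atura's backup jobs are actually implemented.

```python
from datetime import date, timedelta

RETENTION_DAYS = 30  # from the text: snapshots are kept for up to 30 days


def expired_snapshots(snapshot_dates, today, retention_days=RETENTION_DAYS):
    """Return the snapshot dates that fall outside the retention window."""
    cutoff = today - timedelta(days=retention_days)
    return sorted(d for d in snapshot_dates if d < cutoff)


# Hypothetical data: one snapshot per day for the last 35 days.
today = date(2018, 8, 31)
snapshots = [today - timedelta(days=n) for n in range(35)]
to_delete = expired_snapshots(snapshots, today)
print(f"{len(to_delete)} snapshots are older than {RETENTION_DAYS} days")
```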

References

Cosmos DB backup policy: https://docs.microsoft.com/en-us/azure/cosmos-db/online-backup-and-restore

Extensibility

The highlighted blocks in the diagram below indicate where components may be swapped out with alternative implementations.

- Atura Webchat Widget - the chat front-end may be completely customised and integrated with a client's web pages, depending on individual project requirements.

- Atura Service Desk - instead of using the Atura Service Desk module, we can integrate with an external CMS system to handle consultant conversations, agent interactions and ticketing.

- Azure Active Directory - Atura can integrate with a client's on-premises Active Directory by using Azure AD Connect sync.

- Interested Message Consumers - since messages are published to a service bus, an 'interested party' may subscribe to the bus to receive the messages and process them as they wish, e.g. archive them in an external system. Read-only access to a bot's messages is granted only to the client that owns that bot, and to no-one else.

- Azure Table Store - the Azure Table Store may be replaced with another type of data store, a SQL Server instance or Cosmos DB database for example.

- Microsoft LUIS - any other NLP engine that can be accessed with an API Key and Secret may be used as a substitute for LUIS, for example wit.ai or IBM Watson Conversation.

- Microsoft QnA Maker - as with Microsoft LUIS, the QnA Maker may be replaced by any NLP-style API that accepts a key and secret to connect.

Message Flow

The diagram below illustrates how an end user's text message flows through the system.

Communication always happens over encrypted channels to ensure the security of the information being transmitted. See our SECURITY section for more details.

The flow starts when a message is sent from the end user's device to the Bot Framework. From there it is sent to the client-specific bot running on Microsoft Azure. The bot processes the message, making use of the associated NLP engine or decision tree code where applicable, and returns a response to the Bot Framework. The turnaround time for this process is a maximum of 15 seconds. If a response is not returned within the 15-second window, the chat channel will render an error message (which will differ depending on the channel).

As a secondary task, aside from the core message flow, the message is transmitted from the AI assistant to the service bus for archiving and potential consultant takeover via the Service Desk.
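The flow described above can be modelled in a few lines. The sketch below is a simplified, in-process model and not the Bot Framework's own mechanism: `handle_message`, `process` and `publish` are hypothetical names, the deadline is enforced locally purely for illustration, and a `None` reply stands in for the channel-specific error message.

```python
import asyncio

RESPONSE_TIMEOUT_SECONDS = 15  # from the text above


async def handle_message(text, process, publish, timeout=RESPONSE_TIMEOUT_SECONDS):
    """Process a message under a deadline while archiving it as a secondary task."""
    # Secondary path: hand the message to the service bus stand-in.
    publish_task = asyncio.create_task(publish(text))
    try:
        reply = await asyncio.wait_for(process(text), timeout)
    except asyncio.TimeoutError:
        reply = None  # the channel would render its own error message here
    await publish_task
    return reply


async def _demo():
    archived = []

    async def echo_bot(text):
        return f"You said: {text}"

    async def slow_bot(text):
        await asyncio.sleep(0.2)
        return "too late"

    async def publish(text):
        archived.append(text)

    ok = await handle_message("hello", echo_bot, publish)
    # A short timeout is used here so the demo finishes quickly.
    late = await handle_message("hello again", slow_bot, publish, timeout=0.05)
    return ok, late, archived


ok_reply, late_reply, archived_messages = asyncio.run(_demo())
```

Note that the message is archived in both cases, which mirrors the point that archiving is independent of whether the bot answers in time.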

Security

See our SECURITY section for more details.

Integration

At Atura, we place a strong emphasis on integration. We believe that powerful and useful AI assistants should be intelligently integrated with third-party systems to provide a rich user experience. The best AI assistants are able to communicate with a client's back-end systems, allowing end users to interact with the business in a deep and meaningful way.

The following diagram shows integration points where data can be exchanged with external client systems.

The following sections explain potential integration point capabilities in more detail.

Client Back-End Systems

Provided that there is a web service endpoint exposed to the internet, an AI assistant can communicate with any client's back-end system. Typically, calls are made over TLS to REST-style endpoints, secured with an APIKey and Secret or with JWT token exchange. Other approaches, such as SOAP or TCP, are also possible depending on the situation. For example:

- An end user can initiate a chat login that uses their mobile phone number (which is stored in the client's system) and is driven by the client's own two-factor authentication system.

- An end user can complete a transaction to transfer funds between accounts via chat, the details of which are retrieved from the client's own system.
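Where JWT is used to secure calls like these, the token itself is a signed, base64url-encoded structure. The sketch below builds and checks an HS256 token using only the Python standard library. The claim names, issuer value and secret are assumptions made for the example; the actual token format is agreed with each client, and a production system would normally use a maintained JWT library and also validate claims such as expiry.

```python
import base64
import hashlib
import hmac
import json
import time


def _b64url(data: bytes) -> str:
    """Base64url-encode without padding, as JWT requires."""
    return base64.urlsafe_b64encode(data).rstrip(b"=").decode("ascii")


def _sign(signing_input: str, secret: bytes) -> str:
    digest = hmac.new(secret, signing_input.encode("ascii"), hashlib.sha256).digest()
    return _b64url(digest)


def make_jwt(claims: dict, secret: bytes) -> str:
    """Build a compact HS256 JWT: header.payload.signature."""
    header = {"alg": "HS256", "typ": "JWT"}
    signing_input = ".".join(
        _b64url(json.dumps(part, separators=(",", ":")).encode("utf-8"))
        for part in (header, claims)
    )
    return f"{signing_input}.{_sign(signing_input, secret)}"


def verify_jwt(token: str, secret: bytes) -> bool:
    """Check the signature only; claims such as 'exp' are not validated here."""
    signing_input, _, signature = token.rpartition(".")
    return hmac.compare_digest(_sign(signing_input, secret), signature)


# Hypothetical claims and secret, for illustration only.
token = make_jwt({"iss": "example-assistant", "exp": int(time.time()) + 300}, b"demo-secret")
headers = {"Authorization": f"Bearer {token}"}
```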

Service Bus Subscription

Chat messages flow through the system via an Azure Service Bus Topic. Any 'interested parties' - back-end client systems, for example - can subscribe to the Topic to receive all messages, then process and respond to them as necessary. Read-only access to a bot's messages is granted only to the client that owns that bot, and to no-one else. For example:

- All chat conversations for logged-in end users could be read and sent to the client's proprietary CRM system so that the client's service desk agents are aware of user interactions with the AI assistant.
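The publish/subscribe behaviour can be illustrated with a small in-memory model. This is a stand-in written for this example and not the Azure Service Bus SDK: the `Topic` class, the subscription names and the `logged_in` message field are all hypothetical. It shows the key property of a topic, namely that each subscription receives its own copy of every message that matches its filter.

```python
from collections import deque


class Topic:
    """In-memory stand-in for a topic with filtered subscriptions."""

    def __init__(self):
        self._subscriptions = {}

    def subscribe(self, name, predicate=lambda message: True):
        """Register a subscription with an optional message filter."""
        self._subscriptions[name] = (predicate, deque())

    def publish(self, message):
        """Deliver a copy of the message to every matching subscription."""
        for predicate, queue in self._subscriptions.values():
            if predicate(message):
                queue.append(dict(message))

    def receive(self, name):
        """Return the next message for a subscription, or None if it is empty."""
        _, queue = self._subscriptions[name]
        return queue.popleft() if queue else None


topic = Topic()
topic.subscribe("archive")  # receives everything
topic.subscribe("client-crm", lambda m: m["logged_in"])  # logged-in users only

topic.publish({"text": "What are your opening hours?", "logged_in": False})
topic.publish({"text": "What is my balance?", "logged_in": True})

crm_message = topic.receive("client-crm")
```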

Agent API

Client systems may also hook in to the standard "agent takeover" process by calling web services exposed by Atura's Service Desk component. This is useful when a client's back-end system is used in addition to, or instead of, the Atura Service Desk. We expose two services to facilitate changes to the process:

- /sendAgentToUserMessage - sends a message from an agent/consultant to a chat user while the user is in "needs assistance" mode.

- /takeOverComplete - signals that agent/consultant takeover is complete and that the AI assistant can resume responding to user input.

To ensure security, calls to the services only work over HTTPS and must contain a pre-configured APIKey and Secret.
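A call to one of these services might be assembled as in the sketch below. Only the path `/sendAgentToUserMessage` comes from the text above. The base URL, the use of POST with a JSON body, the payload fields and the header names `X-Api-Key` and `X-Api-Secret` are assumptions made for illustration; the real contract should be taken from Atura's API documentation. The request is built but deliberately not sent.

```python
import json
from urllib.parse import urljoin, urlparse
from urllib.request import Request


def build_agent_request(base_url, path, payload, api_key, api_secret):
    """Build (but do not send) an HTTPS request to an Agent API service."""
    if urlparse(base_url).scheme != "https":
        raise ValueError("the Agent API is only reachable over HTTPS")
    return Request(
        urljoin(base_url, path),
        data=json.dumps(payload).encode("utf-8"),
        method="POST",  # assumed HTTP method
        headers={
            "Content-Type": "application/json",
            "X-Api-Key": api_key,        # hypothetical header name
            "X-Api-Secret": api_secret,  # hypothetical header name
        },
    )


# Hypothetical host, payload and credentials.
request = build_agent_request(
    "https://servicedesk.example.com/",
    "/sendAgentToUserMessage",
    {"conversationId": "abc-123", "message": "Hi, a consultant is with you now."},
    api_key="demo-key",
    api_secret="demo-secret",
)
```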

Webchat Widget

If necessary, client integration can be achieved by linking an AI assistant directly with a client via the Bot Framework's DirectLine channel instead of using Atura's embeddable webchat widget. This allows a custom chat front-end to be built, which is useful in situations where a client's desired user interface is very different from a typical chat appearance.

Scalability

Atura was architected with cost and scalability in mind, which makes Microsoft Azure a natural host for our AI assistants. By default, we initially deploy AI assistants on smaller, more cost-effective Azure components. As the need arises (increasing system pressure due to an expanded user base, for example), they can be scaled up.

App Services (Bot and Service Desk Apps)

The Bot and Service Desk apps are hosted on Azure App Services. These services can be scaled up and scaled out as required:

- Scaled up - App Service Plans can be upgraded to have more RAM, CPU and sockets for better performance per instance.

- Scaled out - additional instances can be created automatically based on CPU load (and other metrics), meaning the assistant or service desk can scale out as the need arises.

Service Bus

Azure Service Bus is built to be fast and scalable. By default, we create a Standard tier service bus, which has variable throughput. If necessary, the service bus can be upgraded to Premium tier which allows extremely fast and consistent throughput.

At the time of writing, no throughput SLA was available from Microsoft. Tests available on the Microsoft blog show it has been possible to get throughput well in excess of 10,000 messages per second. Follow this link to read the article: https://blogs.msdn.microsoft.com/servicebus/2016/07/18/premium-messaging-how-fast-is-it/

Table Store

Conversation messages are stored in Azure Table Store by default. A single Table Store instance can store 500 TiB (Tebibytes) of data, and handle 20,000 requests per second - more than is usually required by any reasonable application.

However, if absolutely necessary for a specific project, we can scale out to multiple Table Store instances by partitioning on a subset of Conversation-Ids. In theory this allows unlimited storage, constrained only by budget.
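One way to partition conversations across several instances is to hash the Conversation-Id and use the result to pick an instance, as in the sketch below. This is an illustrative approach under our own assumptions, not necessarily the scheme Atura uses; the function and store names are hypothetical.

```python
import hashlib


def table_store_for(conversation_id: str, store_names: list) -> str:
    """Map a conversation ID to one of several Table Store instances."""
    # A cryptographic hash is used because Python's built-in hash() for
    # strings is randomised per process and so is not stable across runs.
    digest = hashlib.sha256(conversation_id.encode("utf-8")).digest()
    index = int.from_bytes(digest[:8], "big") % len(store_names)
    return store_names[index]


# Hypothetical instance names.
stores = ["conversations-0", "conversations-1", "conversations-2"]
chosen = table_store_for("conversation-42", stores)
```

Every message in a conversation maps to the same instance, so a conversation can still be read back from a single store. The trade-off of simple modulo hashing is that changing the number of instances remaps most conversations; a lookup table or consistent hashing would avoid that at the cost of extra complexity.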

Detailed Azure Table Store scalability targets are documented here: https://docs.microsoft.com/en-us/azure/storage/common/storage-scalability-targets